In recent years, large language models (LLMs) have made remarkable strides in their ability to understand and generate human-like text. These models, such as OpenAI’s GPT and Anthropic’s Claude, have demonstrated impressive performance on a wide range of natural language processing tasks. However, when it comes to complex reasoning tasks that require multiple steps of logical thinking, traditional prompting methods often fall short. This is where Chain-of-Thought (CoT) prompting comes into play, offering a powerful prompt engineering technique to improve the reasoning capabilities of large language models.

Key Takeaways

- CoT prompting enhances reasoning capabilities by generating intermediate steps.

- It breaks down complex problems into smaller, manageable sub-problems.

- Benefits include improved performance, interpretability, and generalization.

- CoT prompting applies to arithmetic, commonsense, and symbolic reasoning.

- It has the potential to significantly impact AI across diverse domains.

Chain-of-Thought prompting is a technique that aims to enhance the performance of large language models on complex reasoning tasks by encouraging the model to generate intermediate reasoning steps. Unlike traditional prompting methods, which typically provide a single prompt and expect a direct answer, CoT prompting breaks down the reasoning process into a series of smaller, interconnected steps.

At its core, CoT prompting involves prompting the language model with a question or problem and then guiding it to generate a chain of thought – a sequence of intermediate reasoning steps that lead to the final answer. By explicitly modeling the reasoning process, CoT prompting enables the language model to tackle complex reasoning tasks more effectively.
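As a minimal sketch of the difference, the snippet below builds a direct prompt and a CoT-style prompt for the same question. The function names and the `Q:`/`A:` layout are illustrative assumptions, not a fixed API, and the phrase "Let's think step by step" is only one commonly used cue for eliciting a chain of thought.

```python
def build_standard_prompt(question: str) -> str:
    """Ask for the answer directly, with no reasoning requested."""
    return f"Q: {question}\nA:"


def build_cot_prompt(question: str) -> str:
    """Ask the model to reason step by step before giving its answer."""
    # The trigger phrase is an assumption; other wordings can work too.
    return f"Q: {question}\nA: Let's think step by step."


question = (
    "If John has 5 apples and Mary has 3 times as many apples as John, "
    "how many apples does Mary have?"
)
print(build_standard_prompt(question))
print(build_cot_prompt(question))
```

Either string would then be sent to whatever model interface is in use; only the prompt text differs between the two approaches.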

One of the key advantages of CoT prompting is that it allows the language model to decompose a complex problem into more manageable sub-problems. By generating intermediate reasoning steps, the model can break down the overall reasoning task into smaller, more focused steps. This approach helps the model maintain coherence and reduces the chances of losing track of the reasoning process.

CoT prompting has shown promising results in improving the performance of large language models on a variety of complex reasoning tasks, including arithmetic reasoning, commonsense reasoning, and symbolic reasoning. By leveraging the power of intermediate reasoning steps, CoT prompting enables language models to exhibit a deeper understanding of the problem at hand and generate more accurate and coherent responses.

Standard vs. CoT prompting (Wei et al., Google Research, Brain Team)

CoT prompting works by generating a series of intermediate reasoning steps that guide the language model through the reasoning process. Instead of simply providing a prompt and expecting a direct answer, CoT prompting encourages the model to break down the problem into smaller, more manageable steps.

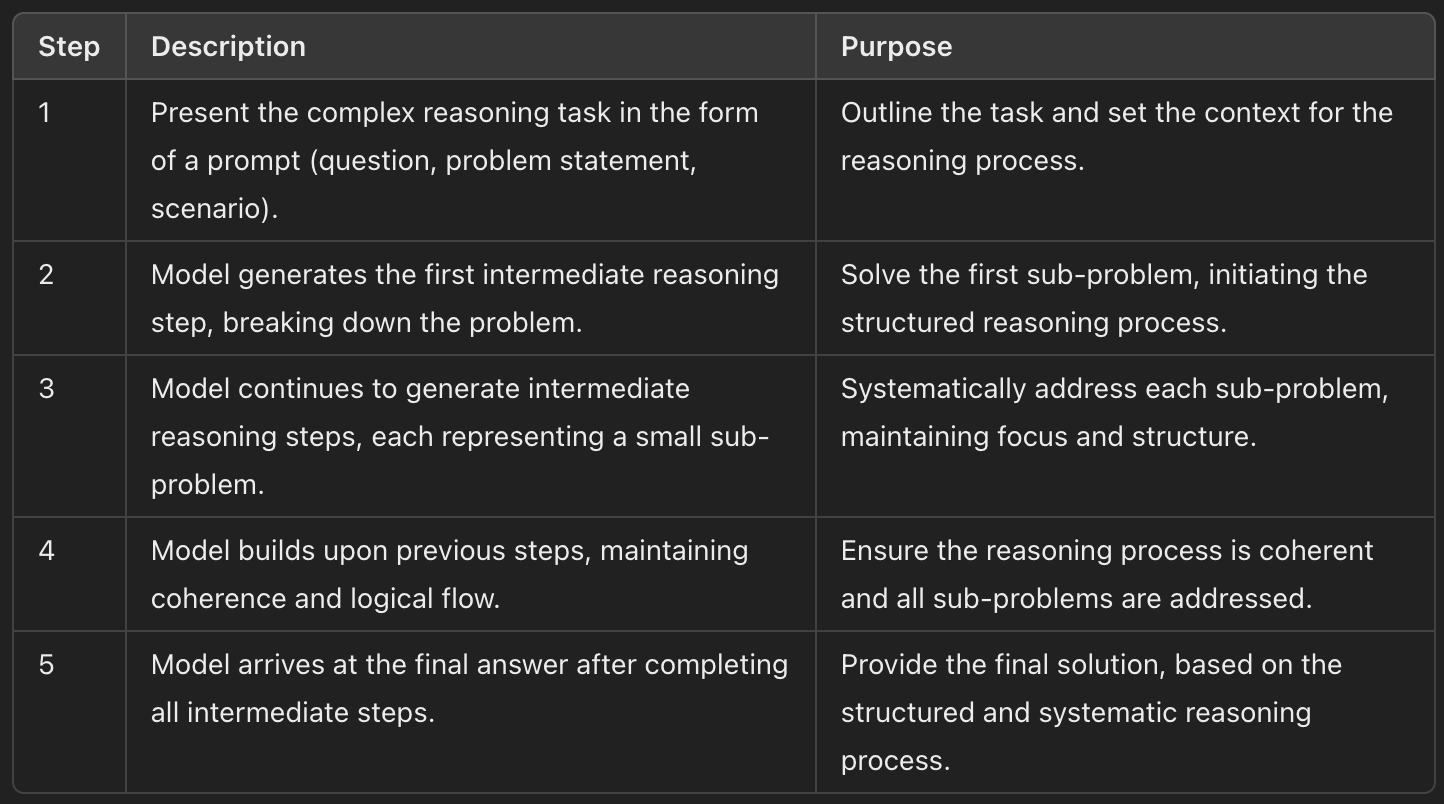

The process begins by presenting the language model with a prompt that outlines the complex reasoning task at hand. This prompt can be in the form of a question, a problem statement, or a scenario that requires logical thinking. Once the prompt is provided, the model generates a sequence of intermediate reasoning steps that lead to the final answer.

Each intermediate reasoning step in the chain of thought represents a small, focused sub-problem that the model needs to solve. By generating these steps, the model can approach the overall reasoning task in a more structured and systematic manner. The intermediate steps allow the model to maintain coherence and keep track of the reasoning process, reducing the chances of losing focus or generating irrelevant information.

As the model progresses through the chain of thought, it builds upon the previous reasoning steps to arrive at the final answer. Each step in the chain is connected to the previous and subsequent steps, forming a logical flow of reasoning. This step-by-step approach enables the model to tackle complex reasoning tasks more effectively, as it can focus on one sub-problem at a time while still maintaining the overall context.

The generation of intermediate reasoning steps in CoT prompting is typically achieved through carefully designed prompts and training techniques. Researchers and practitioners can use various methods to encourage the model to produce a chain of thought, such as providing examples of step-by-step reasoning, using special tokens to indicate the start and end of each reasoning step, or fine-tuning the model on datasets that demonstrate the desired reasoning process.
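The first of these methods, providing examples of step-by-step reasoning, can be sketched roughly as follows. The `Exemplar` class, the `Step N:` and `Answer:` markers, and the sample question about pens are all hypothetical choices made for illustration; they are not a standard format.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    question: str
    steps: list[str]  # intermediate reasoning steps, in order
    answer: str


def format_exemplar(ex: Exemplar) -> str:
    """Render one worked example with numbered reasoning steps."""
    numbered = "\n".join(
        f"Step {i}: {step}" for i, step in enumerate(ex.steps, start=1)
    )
    return f"Q: {ex.question}\n{numbered}\nAnswer: {ex.answer}"


def build_few_shot_cot_prompt(exemplars: list[Exemplar], question: str) -> str:
    """Concatenate worked examples, then leave the new question open at Step 1."""
    shots = "\n\n".join(format_exemplar(ex) for ex in exemplars)
    return f"{shots}\n\nQ: {question}\nStep 1:"


# A made-up exemplar, used only to show the layout of the prompt.
demo = Exemplar(
    question="A box holds 4 pens. How many pens are in 3 boxes?",
    steps=["Each box holds 4 pens.", "3 boxes hold 3 × 4 = 12 pens."],
    answer="12",
)
print(build_few_shot_cot_prompt([demo], "How many wheels do 5 cars have?"))
```

Ending the prompt at `Step 1:` nudges the model to continue in the same step-by-step pattern as the worked examples.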

5-Step CoT prompting process

By guiding the language model through the reasoning process using intermediate steps, CoT prompting enables the model to solve complex reasoning tasks more accurately and efficiently. The explicit modeling of the reasoning process also enhances the interpretability of the model’s outputs, as the generated chain of thought provides insights into how the model arrived at its final answer.
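To make use of that interpretability in practice, the generated text usually has to be separated into its reasoning steps and its final answer. The sketch below assumes one step per line and a closing line of the form `Answer: ...`; real model outputs vary, so this format and the `split_chain` helper are assumptions for illustration.

```python
import re
from typing import Optional


def split_chain(output: str) -> tuple[list[str], Optional[str]]:
    """Split a model output into reasoning steps and a final answer.

    Assumes one step per line and a line of the form 'Answer: <text>'.
    Returns (steps, None) when no answer line is found.
    """
    steps: list[str] = []
    answer: Optional[str] = None
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines between steps
        match = re.match(r"^answer\s*:\s*(.+)$", line, flags=re.IGNORECASE)
        if match:
            answer = match.group(1).strip()
        else:
            steps.append(line)
    return steps, answer


sample = (
    "John has 5 apples.\n"
    "Mary has 3 times as many apples as John.\n"
    "5 × 3 = 15\n"
    "Answer: 15"
)
steps, answer = split_chain(sample)
print(steps)
print(answer)
```

Keeping the steps separate from the answer lets a reviewer inspect each step on its own rather than only checking the final result.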

CoT prompting has been successfully applied to a variety of complex reasoning tasks, demonstrating its effectiveness in improving the performance of large language models.

Let’s explore a few examples of how CoT prompting can be used in different domains.

Arithmetic Reasoning

One of the most straightforward applications of CoT prompting is in arithmetic reasoning tasks. By generating intermediate reasoning steps, CoT prompting can help language models solve multi-step arithmetic problems more accurately.

For example, consider the following problem:

"If John has 5 apples and Mary has 3 times as many apples as John, how many apples does Mary have?"

Using CoT prompting, the language model can generate a chain of thought like this:

1. John has 5 apples.

2. Mary has 3 times as many apples as John.

3. To find the number of apples Mary has, we need to multiply John's apples by 3.

4. 5 apples × 3 = 15 apples

5. Therefore, Mary has 15 apples.

By breaking down the problem into smaller steps, CoT prompting enables the language model to reason through the arithmetic problem more effectively.
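Because each arithmetic step is written out, it can also be checked mechanically. The following is a small sketch, assuming steps are phrased like "5 apples × 3 = 15 apples"; the pattern and the `check_multiplication_step` helper are hypothetical and cover only this simple multiplication form.

```python
import re

# Matches steps such as "5 apples × 3 = 15 apples"; unit words are optional.
STEP_PATTERN = re.compile(
    r"(\d+)\s*[A-Za-z]*\s*[×x*]\s*(\d+)\s*[A-Za-z]*\s*=\s*(\d+)"
)


def check_multiplication_step(step: str) -> bool:
    """Return True if the product stated in the step is arithmetically correct."""
    match = STEP_PATTERN.search(step)
    if match is None:
        raise ValueError(f"no multiplication found in step: {step!r}")
    left, right, stated = (int(group) for group in match.groups())
    return left * right == stated


print(check_multiplication_step("5 apples × 3 = 15 apples"))  # True
```

A check like this only validates the arithmetic inside a step; it does not confirm that the step was the right one to take for the problem.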

Commonsense Reasoning

CoT prompting has also shown promise in tackling commonsense reasoning tasks, which require a deep understanding of everyday knowledge and logical thinking.

For instance, consider the following question:

"If a person is allergic to dogs and their friend invites them over to a house with a dog, what should the person do?"

A language model using CoT prompting might generate the following chain of thought:

1. The person is allergic to dogs.

2. The friend's house has a dog.

3. Being around dogs can trigger the person's allergies.

4. To avoid an allergic reaction, the person should decline the invitation.

5. The person can suggest an alternative location to meet their friend.

By generating intermediate reasoning steps, CoT prompting allows the language model to demonstrate a clearer understanding of the situation and provide a logical solution.

Symbolic Reasoning

CoT prompting has also been applied to symbolic reasoning tasks, which involve manipulating and reasoning with abstract symbols and concepts.

For example, consider the following problem:

"If A implies B, and B implies C, does A imply C?"

Using CoT prompting, the language model can generate a chain of thought like this:

1. A implies B means that if A is true, then B must also be true.

2. B implies C means that if B is true, then C must also be true.

3. If A is true, then B is true (from step 1).

4. If B is true, then C is true (from step 2).

5. Therefore, if A is true, then C must also be true.

6. So, A does imply C.

By generating intermediate reasoning steps, CoT prompting enables the language model to handle abstract symbolic reasoning tasks more effectively.
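The conclusion of this particular chain can be confirmed independently with a truth table. The sketch below treats "implies" as material implication, which is an assumption about how the problem is meant to be read, and checks all eight truth assignments for A, B, and C.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def transitivity_holds() -> bool:
    """Check that A->C holds in every case where both A->B and B->C hold."""
    return all(
        implies(a, c)
        for a, b, c in product([False, True], repeat=3)
        if implies(a, b) and implies(b, c)
    )


print(transitivity_holds())  # True
```

An exhaustive check like this is feasible only because the problem has three Boolean variables; it serves as a reference answer against which a model's chain of thought can be compared.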

These examples demonstrate the versatility and effectiveness of CoT prompting in improving the performance of large language models on complex reasoning tasks across different domains. By explicitly modeling the reasoning process through intermediate steps, CoT prompting enhances the model’s ability to tackle challenging problems and generate more accurate and coherent responses.

Benefits of Chain-of-Thought Prompting

Chain-of-Thought prompting offers several significant benefits in advancing the reasoning capabilities of large language models. Let’s explore some of the key advantages:

Improved Performance on Complex Reasoning Tasks

One of the primary benefits of CoT prompting is its ability to enhance the performance of language models on complex reasoning tasks. By generating intermediate reasoning steps, CoT prompting enables models to break down intricate problems into more manageable sub-problems. This step-by-step approach allows the model to maintain focus and coherence throughout the reasoning process, leading to more accurate and reliable results.

Studies have shown that language models prompted with CoT often outperform the same models given standard prompts on complex, multi-step reasoning tasks, with the largest gains reported for large models (Wei et al.). Note that this is a difference in how the model is prompted, not in how it is trained. The explicit modeling of the reasoning process through intermediate steps has proven to be a powerful technique for improving the model’s ability to handle challenging problems that require multi-step reasoning.
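A comparison of this kind can be set up with a small evaluation loop, run once per prompting style. In the sketch below, `model` stands for any callable that maps a prompt string to the model's text output, and the rule that the final answer is the last word of the output is a simplifying assumption; the stub model exists only so the code runs and does not measure anything real.

```python
from typing import Callable


def accuracy(
    model: Callable[[str], str],
    make_prompt: Callable[[str], str],
    dataset: list[tuple[str, str]],
) -> float:
    """Fraction of questions whose output ends with the expected answer."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = 0
    for question, expected in dataset:
        output = model(make_prompt(question)).strip()
        # Assumes the final answer is the last whitespace-separated token.
        if output and output.split()[-1].rstrip(".") == expected:
            correct += 1
    return correct / len(dataset)


def stub_model(prompt: str) -> str:
    """Placeholder standing in for a real model call; always answers 15."""
    return "5 × 3 = 15. The answer is 15."


toy_dataset = [("How many apples does Mary have?", "15")]
print(accuracy(stub_model, lambda q: f"Q: {q}\nA:", toy_dataset))
```

Calling `accuracy` twice with the same model and dataset, once with a standard prompt builder and once with a CoT prompt builder, gives the two numbers to compare.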

Enhanced Interpretability of the Reasoning Process

Another significant benefit of CoT prompting is the enhanced interpretability of the reasoning process. By generating a chain of thought, the language model provides a clear and transparent explanation of how it arrived at its final answer. This step-by-step breakdown of the reasoning process allows users to understand the model’s thought process and assess the validity of its conclusions.

The interpretability offered by CoT prompting is particularly valuable in domains where the reasoning process itself is of interest, such as in educational settings or in systems that require explainable AI. By providing insights into the model’s reasoning, CoT prompting facilitates trust and accountability in the use of large language models.

Potential for Generalization to Various Reasoning Tasks

CoT prompting has demonstrated its potential to generalize to a wide range of reasoning tasks. While the technique has been successfully applied to specific domains like arithmetic reasoning, commonsense reasoning, and symbolic reasoning, the underlying principles of CoT prompting can be extended to other types of complex reasoning tasks.

The ability to generate intermediate reasoning steps is a fundamental skill that can be leveraged across different problem domains. By supplying suitable worked examples in the prompt, or by fine-tuning language models on datasets that demonstrate the desired reasoning process, CoT-style reasoning can be adapted to tackle novel reasoning tasks, expanding its applicability and impact.

Facilitating the Development of More Capable AI Systems

CoT prompting plays a crucial role in facilitating the development of more capable and intelligent AI systems. By improving the reasoning capabilities of large language models, CoT prompting contributes to the creation of AI systems that can tackle complex problems and exhibit higher levels of understanding.

As AI systems become more sophisticated and are deployed in various domains, the ability to perform complex reasoning tasks becomes increasingly important. CoT prompting provides a powerful tool for enhancing the reasoning skills of these systems, enabling them to handle more challenging problems and make more informed decisions.

A Quick Summary

CoT prompting is a powerful technique that enhances the reasoning capabilities of large language models by generating intermediate reasoning steps. By breaking down complex problems into smaller, more manageable sub-problems, CoT prompting enables models to tackle challenging reasoning tasks more effectively. This approach improves performance, enhances interpretability, and facilitates the development of more capable AI systems.

FAQ

How does Chain-of-Thought prompting (CoT) work?

CoT prompting works by generating a series of intermediate reasoning steps that guide the language model through the reasoning process, breaking down complex problems into smaller, more manageable sub-problems.

What are the benefits of using chain-of-thought prompting?

The benefits of CoT prompting include improved performance on complex reasoning tasks, enhanced interpretability of the reasoning process, potential for generalization to various reasoning tasks, and facilitating the development of more capable AI systems.

What are some examples of tasks that can be improved with chain-of-thought prompting?

Some examples of tasks that can be improved with CoT prompting include arithmetic reasoning, commonsense reasoning, symbolic reasoning, and other complex reasoning tasks that require multiple steps of logical thinking.
